PCLinuxOS Magazine

The Social Dilemma

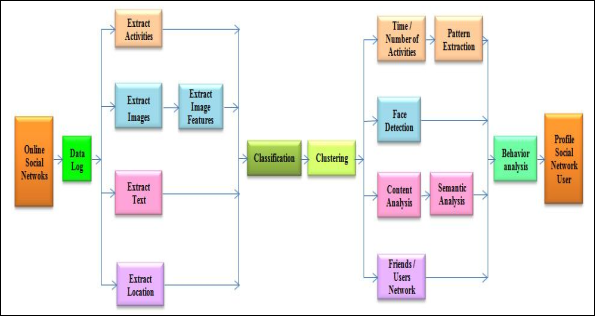

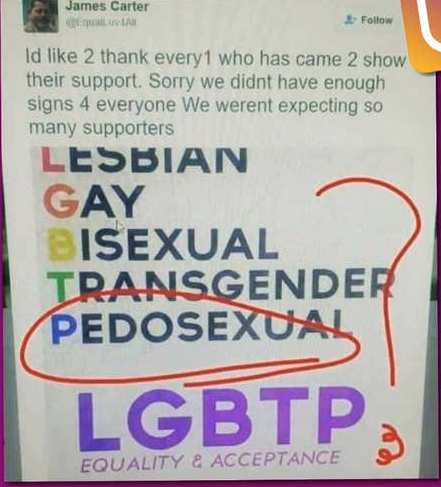

by Agent Smith (Alessandro Ebersol)

The documentary "The Social Dilemma", which premiered on Netflix in September 2020, interviews technology experts from Silicon Valley to show how social networks are reprogramming civilization. Former employees of companies like Google, Facebook, Twitter, Instagram and Pinterest tell how the executives of these companies not only know this, but also manipulate the algorithms to induce behavior in people.

Social Dilemma? What Do I Care?

I have already written about how Google constantly monitors its users, uses so-called surveillance capitalism to earn a lot of money, profiles everyone, and saves information about them in databases, often in other countries, where the laws of the user's native country do not apply.

But it's not only that. Being connected nowadays is, in some cases, even a matter of survival, since there are people who use WhatsApp as a work tool. And, as for WhatsApp, how many times have two people talked about, for example, dog food, only to open Facebook and find dog food advertisements in the side bars? Magic? Does Facebook read my thoughts? No, it's nothing like that. It is 24/7 surveillance: even when you are apparently not connected, it is always listening. And, thanks to the entire telecommunications, cell phone and internet infrastructure, monitoring the user is not just a passive action. No. The whole apparatus of social/communication networks and applications is actively used to influence its audience.

If They Were Just Targeted Advertisements, It Wouldn't Be So Bad.

No, of course not. Open your email and you find hundreds of advertisements for Viagra, for increasing your penis size, for finding singles in your area, etc. It gets very annoying sometimes. But one can survive that. With some care, spam filters, ad blockers and other tools, one can avoid these advertisements and still interact on the internet. But, no. It is worse, and it gets much worse. But how?
Well, I'll look at how social networks profile their users and use targeted messages. The figure below illustrates the architecture of the user profile. There are several ways in which a user can be profiled, and they also depend on the application. The general user profiling process is as follows: raw data from online social networks is collected and analyzed with respect to various aspects, such as activities, images, text data and geographic location.

In this phase, the data relating to the number of activities performed, the images posted or profile photos, the data provided by the user and the user's geographic location are extracted separately. For images, the details of the image and its features are extracted using appropriate algorithms. The respective data is analyzed, and related and unrelated data are separated using classification mechanisms. Then, based on the classified data, users are placed into distinct groups, each with a group name and functionality.
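
To make the steps above more concrete (collect raw data, extract features, classify, group), here is a minimal Python sketch. Everything in it is an assumption made for illustration: the `RawActivity` record, the keyword lists standing in for a real classifier, and the grouping by dominant topic are hypothetical, and they are not how any particular social network implements profiling.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass
class RawActivity:
    """One hypothetical raw event collected from a social network."""
    user: str
    kind: str           # e.g. "post", "like", "share"
    text: str = ""
    location: str = ""


# Hypothetical keyword lists standing in for a real text classifier.
TOPIC_KEYWORDS = {
    "pets": {"dog", "cat", "kitten", "puppy"},
    "linux": {"linux", "kernel", "distro"},
}


def classify_text(text):
    """Assign a text to the topic whose keywords it mentions most."""
    words = set(text.lower().split())
    scores = {topic: len(words & kws) for topic, kws in TOPIC_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "other"


def build_profiles(events):
    """Extract activity counts, topics and locations separately per user."""
    profiles = defaultdict(lambda: {"activities": Counter(),
                                    "topics": Counter(),
                                    "locations": Counter()})
    for ev in events:
        profile = profiles[ev.user]
        profile["activities"][ev.kind] += 1
        if ev.text:
            profile["topics"][classify_text(ev.text)] += 1
        if ev.location:
            profile["locations"][ev.location] += 1
    return dict(profiles)


def group_users(profiles):
    """Place each user in the group named after their dominant topic."""
    groups = defaultdict(list)
    for user, profile in profiles.items():
        dominant = profile["topics"].most_common(1)
        groups[dominant[0][0] if dominant else "other"].append(user)
    return dict(groups)


# Made-up sample data, purely for demonstration.
events = [
    RawActivity("alice", "post", "my dog loves this dog food", "Lisbon"),
    RawActivity("alice", "post", "cute puppy video", "Lisbon"),
    RawActivity("bob", "share", "new linux kernel released", "Recife"),
]

profiles = build_profiles(events)
groups = group_users(profiles)
print(groups)
```

Even this toy version shows the point: two posts about a dog are enough to file "alice" under a pets group that advertisers could then target. A real system would use far richer signals (images, contacts, location history) and statistical models instead of keyword lists.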

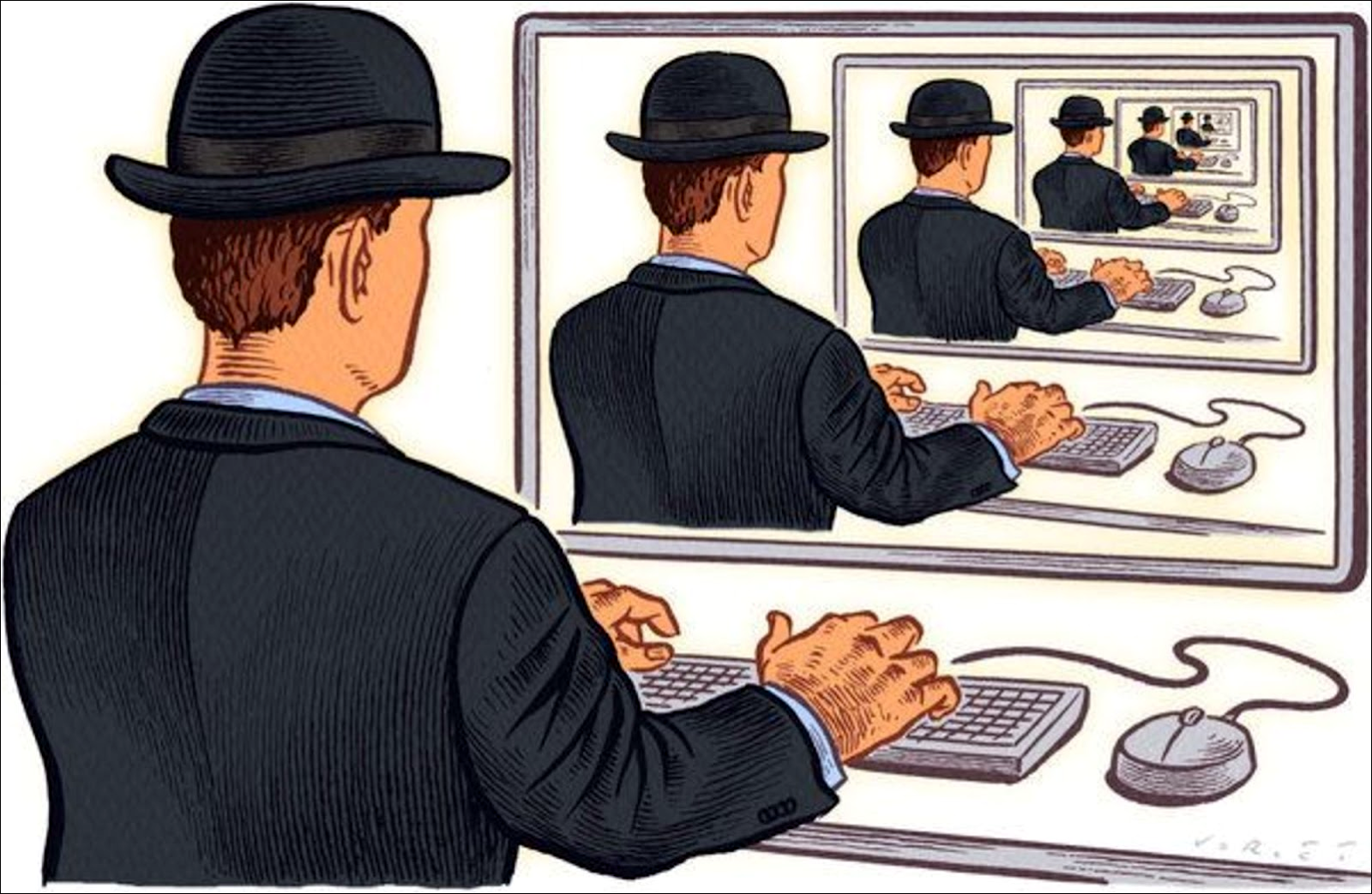

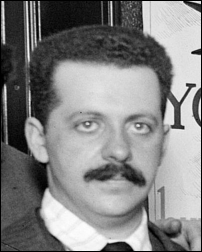

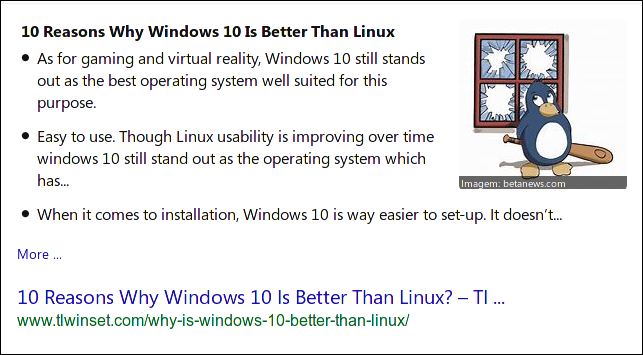

After obtaining the details of the users and their related groups, the number, type and pattern of activities for each user are extracted. The user's behavior is derived from the activities the user performs most often and from the influence the user exerts on others. Thus, by analyzing this pattern of behavior on online social networks, the user's profile is defined.

The Internet Has Become A Giant Echo Chamber ...

Thanks to profiling and classification, social networks have become giant echo chambers, real bubbles that keep people apart instead of bringing them closer, and that create a very powerful and convincing illusion about reality.

I once had the opportunity, while using YouTube, to access a channel of politically extremist ideas, run by a YouTuber who spoke harshly against his opponents and often insulted people who did not share his opinion. I will not mention the channel or the YouTuber, since he does not deserve advertising or any other means to spread his extremist views. I accessed his channel a long time ago, when it was a small channel, and when videos from his channel appeared on my timeline again, I chose to ignore them. It was content that is not important to me. Okay, his videos never appeared on my timeline again.

Some time passed, about two years, more or less, and I was curious: did that guy's channel close? No more of his videos had ever appeared on my timeline, and I thought he had shut down his channel and given up. I searched for the channel on YouTube, and there it was, going strong, with over 100 thousand subscribers. I was speechless: yeah, being rude and spreading hate speech pays off. Gee, I'm doing it wrong with my innocent Linux videos. I should speak ill of others and gain followers.

However, this classification that applications make of their public has transformed the internet into echo chambers, which separate people by their tendencies and their profiles. The danger of that?
It is simple: minorities think they are majorities and think that everyone thinks the same way (because the algorithm hides different ideas), which creates a distorted view of reality. Thus, groups of people on social networks cannot be analyzed as majorities or minorities. In fact, it's not even possible to know a group's size, since the algorithms make it difficult for people with different characteristics to interact, creating bubbles and filling these bubbles with certain groups, each classified in a different way, which is not transparent to its users.

The Implications Of Living In An Echo Chamber

The implications of being "trapped" inside an echo chamber on social media are quite frightening. Let's see:

• New ideas do not get through. There is no diversity in the messages that reach users, only more of the same.
• As a consequence, because the user is bombarded by only one type of information, political polarization increases, and often so does intolerance of opposing opinions, both online and in real life.
• Another result is that each group believes itself to be the majority, since it is never confronted by contrary ideas.
• Within these bubbles, people tend to accept information that corroborates what they believe, even if that information may be false.
• The yearning for likes and attention leads to the gradual disappearance of rational thinking in favor of group thinking. In other words, the herd effect is accentuated, and its influence can literally generate armies of zombies that do the same thing because the others do it too.
• It was also discovered that likes on Facebook trigger releases of dopamine that create instant self-satisfaction and which, studies have shown, generate addiction much as injections of heroin do.
• Obviously, once a user becomes addicted to likes, he will be more active on the social network in order to get more likes, like a good addict.

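
The claim that each group comes to see itself as the majority can be illustrated with a small Python sketch. It is a deliberately crude model built on assumptions of my own: stances are reduced to the labels "A" and "B", and the hypothetical `visible_feed` filter lets through only one in every `keep_every` opposing posts. Real ranking algorithms are far more complex and are not public.

```python
def visible_feed(posts, user_stance, keep_every=10):
    """Return the posts a user sees under a simplified filter.

    Posts matching the user's stance always pass; only every
    `keep_every`-th opposing post is shown (a hypothetical rule).
    """
    feed = []
    opposing_seen = 0
    for stance in posts:
        if stance == user_stance:
            feed.append(stance)
        else:
            opposing_seen += 1
            if opposing_seen % keep_every == 0:
                feed.append(stance)
    return feed


def share(feed, stance):
    """Fraction of a feed that holds the given stance."""
    return sum(1 for s in feed if s == stance) / len(feed) if feed else 0.0


# Made-up population: 20 posts from minority "A", 80 from majority "B".
posts = ["A"] * 20 + ["B"] * 80

actual_share = share(posts, "A")    # true share of stance "A"
feed_a = visible_feed(posts, "A")   # what a member of "A" sees
seen_by_a = share(feed_a, "A")      # share of "A" inside that feed

print(f"actual share of A: {actual_share:.2f}")
print(f"share of A seen by an A user: {seen_by_a:.2f}")
```

With these made-up numbers, stance "A" is held by 20% of the posts, yet a member of "A" sees a feed in which "A" makes up roughly 71% (20 of 28 visible posts). The minority looks like a comfortable majority from the inside, and nothing in the feed reveals how much was filtered out.
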
And real, dangerous consequences also come from echo chambers:

• Anti-vaccine groups gain followers, usually attracted by conspiracy narratives.
• The number of people who believe that the earth is flat has also increased, due to conspiracy theories spread across social networks.
• And, last but not least, according to Ahmed Bensaada's book, The Arab Spring: Made in the USA, all the instigators of the Arab Spring uprisings were well-educated young people who mysteriously disappeared after 2011. It is worth remembering that social networks (Twitter, Facebook, YouTube, etc.) played a very important role in influencing thousands of young people (a collective brainwashing) to take to the streets in the popular uprisings between 2010 and 2012.

The Mechanisms Of Social Control, Used In Social Media, Are Old

Yes, this is very old. And these mechanisms can be credited to Edward Bernays. Bernays said: "The conscious and intelligent manipulation of the organized habits and opinions of the masses is an important element in democratic society. Those who manipulate this invisible mechanism of society constitute an invisible government that is the true dominant power of any country. We are governed, our minds are shaped, our tastes are formed, our ideas are suggested, largely by men we have never heard of... in almost every act of our daily lives, whether in the sphere of politics or business, in our social conduct or our ethical thinking, we are dominated by a relatively small number of people... who understand the mental processes and social patterns of the masses. They are the ones who pull the strings that control the public's mind, take advantage of old social forces and create new ways to connect and guide the world."

Bernays in 1917

Edward Bernays was Sigmund Freud's nephew, and a pioneering mind behind modern advertising and the public relations field. Bernays' influence was enormous.
Leaning heavily on his uncle's ideas, he developed highly successful manipulation techniques that are still used today, not only by companies to sell consumer products, but also by the powers that be for what Bernays called "controlling and regimenting the masses".

The great potential of using insights from group psychology to control the masses is partly due to the fact that an individual can be influenced by a group or crowd even when physically isolated. As Bernays pointed out in his book, Crystallizing Public Opinion, a crowd "does not merely mean a physical aggregation of a number of people... the crowd is a state of mind" (Crystallizing Public Opinion, Edward Bernays). Once you identify with a group, your mind and behavior are altered by the lasting influence of group psychology, even without any other group member physically present.

When identifying with a group, the individual subordinates self-analysis and a careful search for the truth to maintaining the group's interests and cohesion. And with their critical capacities weakened by the influence of group psychology, individuals become highly susceptible to psychological operations designed to target repressed or unconscious desires and emotions, and are thus manipulable.

Freud's studies showed that some information is captured emotionally before it is processed rationally. Thus, faced with information that would cause outrage, the public does not process it rationally, but emotionally, and either accepts it as a truth or treats it as something to be vehemently fought. Take, for example, the fake news claiming that pedophilia is just another sexual orientation and should therefore be part of the LGBT community, which circulated on social media in 2018.

After circulating for a long time, it was debunked. But not before going around the internet and leaving mothers, fathers and grandparents outraged with the LGBT community.
Think about how many WhatsApp messages this photo montage appeared in. And, perhaps worse, how many people still think it is true.

The Algorithm: The Invisible Hand

Social networks are addictive and encourage constant engagement. However, all this engagement of users with their social media does not happen by chance. It is carefully crafted by the algorithms. The purpose of the algorithms is to make the user stay on the social network for as long as possible, consuming the content they offer. In fact, social networks compete for the attention of users. And, to get more attention, the algorithm manipulates the user.

Attention economics is an approach to information management that treats human attention as a scarce commodity and applies economic theory to solve various information management problems. According to Thomas Davenport, in his book The Attention Economy: Understanding the New Currency of Business, attention is today the true currency of business and of individuals.

YouTube, Facebook, fighting for your attention

Many digital platforms compete for attention, and the best way to get people's attention is to study how the mind works. There are several persuasion techniques used in the technology industry to win people's attention, and YouTube is one example. YouTube wants to maximize the time you spend watching videos, so it introduced "autoplay", so that the next video opens automatically and you spend more time watching. Or consider Facebook's default behavior of automatically starting a video in the news feed without you having to click on play. And, because the video interested you, most of the time, almost unconsciously, you forget about work or other tasks and keep watching.

But it doesn't stop there. To keep the user connected to the social network, the algorithm feeds the user increasingly interesting content.
The result: if the user started by watching videos of kittens, after 45 minutes he will be offered videos of lions or tigers, to keep him connected. And this trend is followed in all subjects. If the user became interested in a Facebook page supporting candidate A, from party B, after some time several pages of candidates from that party will have appeared for this user, or even pages of organizations that support this party, in ever greater numbers.

The Echo Chamber Isolates

These echo chambers, created by social networks and search portals, isolate users and often keep them from what they are really looking for. So much so that nowadays, when I need to search, I use at least 5 different search engines, since Google's results are no longer reliable. It does not report all existing results, but filters those that it "thinks" would interest the user.

Besides, this bubble of results can be manipulated by the commercial interests of the search companies themselves. Example: try searching for "Linux is better than Windows 10" in the Bing search engine, to see what results it returns. Bing belongs to Microsoft and, despite the company saying that it "Loves Linux", don't believe everything you see on the Internet...

Spoiler: what Bing responds for linux is better than windows 10

Final Words

Well, if you made it this far, I got your engagement and, I think, your attention. And, no, there is no algorithm involved. Just the desire to spread knowledge about how social networks manipulate their users, how personal data has become a bargaining chip in this new economy of surveillance capitalism, and how people must know this, so that they are no longer manipulated, whether into buying a product, voting for a candidate, or condemning or acquitting anyone, because of the influence of social networks.

As for the film, watch The Social Dilemma, available on Netflix since September 2020. It's very interesting and scary; a real-life horror movie.